🚀 Master Databricks Data Engineering: Your Step-by-Step Roadmap to the Lakehouse

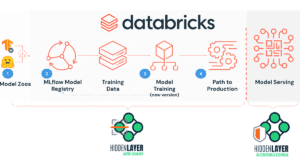

In the age of big data, the ability to process, manage, and optimize massive datasets is not just a skill—it’s a superpower. This is the domain of the Databricks Data Engineer. Databricks stands out as the ultimate unified analytics platform, merging the best of data warehouses and data lakes into a powerful Lakehouse architecture for analytics, machine learning, and AI. This guide breaks down the essential concepts, tools, and roadmap you need to become an expert in cloud-based data engineering using Databricks.

📺 Deep Dive into Databricks Data Engineering

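The video this guide is based on provides a structured overview of what Databricks is and the core responsibilities of a Data Engineer. Timestamps in square brackets throughout this guide point to the relevant moments in the video.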


📝 What’s On Deck: Deconstructing the Databricks Platform

⚙️ Key Concepts & Platform Definitions

Before diving into the “how,” it’s crucial to understand the foundational “what.”

- Databricks Defined: It is a cutting-edge cloud computing platform and environment designed for implementing Data Warehouses (DWH) and advanced analytics [00:01:54].

- Data Storage Scope: Databricks is built to handle any type of data (e.g., barcodes, QR codes) and any amount (terabytes to petabytes) seamlessly [00:00:46].

- DWH vs. OLTP:

  - OLTP (Online Transaction Processing): Designed for storing live, real-time transactional data [00:01:16].

  - Data Warehouse (DWH): Designed for storing historical, inactive data for long-term analysis. This is the primary focus for a Data Engineer [00:01:30].
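To make the OLTP vs. DWH contrast concrete, here is a toy sketch that uses Python’s built-in `sqlite3` module as a stand-in for both systems. In practice the OLTP database and the Databricks warehouse are separate engines; the `orders` table, the `place_order` helper, and the sample rows are hypothetical. The write path mimics OLTP (one short transaction per live event), and the read path mimics DWH work (scanning accumulated history and aggregating it).

```python
import sqlite3

# An in-memory SQLite database stands in for both systems in this toy example.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, customer_id INTEGER, order_date TEXT, amount REAL)"
)


def place_order(conn, customer_id, order_date, amount):
    """OLTP-style write: one short transaction per live business event."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            "INSERT INTO orders (customer_id, order_date, amount) VALUES (?, ?, ?)",
            (customer_id, order_date, amount),
        )


for event in [(1, "2023-01-15", 20.0), (2, "2023-01-20", 35.5), (1, "2023-02-03", 12.5)]:
    place_order(conn, *event)

# DWH-style read: scan the accumulated history and aggregate it for analysis.
monthly_revenue = conn.execute(
    "SELECT substr(order_date, 1, 7) AS month, SUM(amount) AS revenue "
    "FROM orders GROUP BY month ORDER BY month"
).fetchall()
print(monthly_revenue)  # [('2023-01', 55.5), ('2023-02', 12.5)]
```

The OLTP side cares about each individual write succeeding quickly and atomically; the DWH side cares about answering questions across the whole history.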

🛣️ The Data Engineer’s Core Role: ETL in the Cloud

A Data Engineer’s mission is to make data usable, which primarily involves the Extract, Transform, and Load (ETL) process.

- Core Mandate: To design, build, manage, and optimize data warehouses in the cloud [00:02:59].

- The ETL Process:

  - Extract: Reading various types of raw data (files, databases, applications) [00:04:42].

  - Transform: Shaping, formatting, filtering, and mashing up the data to ensure it’s clean and ready for analysis [00:03:56].

  - Load: Delivering the processed, high-quality data to its final destination, which can be a traditional data warehouse or, more commonly, a Lakehouse [00:04:05].

- Related Activities: The role also covers implementing ETL, cloud storage solutions, security measures, and performance optimizations [00:03:21].
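The three ETL stages can be sketched as three small functions. This version deliberately avoids Spark and uses only the Python standard library, so the shape of the process is easy to see; on Databricks the transform step would run on Spark DataFrames instead. The sample CSV text, the function names, and the `customer_master` destination (an in-memory SQLite table standing in for a warehouse or Lakehouse table) are all hypothetical.

```python
import csv
import io
import sqlite3
from datetime import date

# Hypothetical raw extract: headerless CSV rows, including one bad record.
RAW_CSV = "1,Ada Lovelace,2023-01-15\n,Missing Id,2023-02-01\n2,Alan Turing,2023-03-09\n"


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.reader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types, tidy values, and drop rows without an id."""
    clean = []
    for customer_id, full_name, join_date in rows:
        if not customer_id.strip():
            continue  # filter out bad records
        # fromisoformat() validates the date; isoformat() stores it as text.
        clean.append(
            (int(customer_id), full_name.strip(), date.fromisoformat(join_date).isoformat())
        )
    return clean


def load(rows, conn):
    """Load: write the clean rows to the destination table."""
    with conn:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS customer_master "
            "(customer_id INTEGER, full_name TEXT, join_date TEXT)"
        )
        conn.executemany("INSERT INTO customer_master VALUES (?, ?, ?)", rows)


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
customers = conn.execute("SELECT * FROM customer_master ORDER BY customer_id").fetchall()
print(customers)  # [(1, 'Ada Lovelace', '2023-01-15'), (2, 'Alan Turing', '2023-03-09')]
```

Keeping each stage as a separate function makes it easy to swap the source or destination without touching the transformation logic.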

💻 Essential Tech Stack & Advanced Concepts

To succeed on the Databricks platform, you must master the underlying technologies and architectural patterns [00:06:06]:

- Core Languages & Frameworks: You must be proficient in SparkSQL, Spark, Python, PySpark, and Scala.

- Modern Architecture: Understanding the Lambda Architecture and Unity Catalog for centralized data governance is key [00:06:21].

- Operational Tools: Expertise in Databricks Notebooks, Jobs, Workflows, and CI/CD (Continuous Integration/Continuous Deployment) is required for productionizing data pipelines.

- The Full Journey: The recommended learning path starts with strong SQL fundamentals, progresses to Python, and then moves on to the Databricks-specific Data Engineering modules [00:07:08].
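Productionizing pipelines with CI/CD usually means that transformation logic is checked automatically before it is deployed. One common practice, sketched here under the assumption that your logic lives in plain, importable functions rather than only in notebook cells, is to cover each transformation with unit tests that a CI server runs on every commit (for example with `python -m unittest`). The `clean_customer` function is hypothetical and mirrors the cleaning rules used later in this guide.

```python
import unittest


def clean_customer(record):
    """Hypothetical transform: cast the id, trim the name, reject rows without an id."""
    raw_id, full_name = record
    if raw_id is None or not str(raw_id).strip():
        return None  # bad record: filtered out
    return {"customer_id": int(raw_id), "full_name": full_name.strip()}


class CleanCustomerTest(unittest.TestCase):
    def test_casts_id_and_trims_name(self):
        self.assertEqual(
            clean_customer(("42", "  Grace Hopper ")),
            {"customer_id": 42, "full_name": "Grace Hopper"},
        )

    def test_rejects_missing_id(self):
        self.assertIsNone(clean_customer(("", "No Id")))
        self.assertIsNone(clean_customer((None, "No Id")))


if __name__ == "__main__":
    # exit=False keeps the interpreter running after the tests finish.
    unittest.main(argv=["ci-tests"], exit=False)
```

If any test fails, the CI pipeline stops before the new code reaches a production job.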

💡 Hands-On Challenge: The Core ETL Transformation

Challenge: You need to transform a raw customer data file into a clean, analytics-ready Delta Lake table on Databricks. How do you approach the Transform step?

Hint: Quick Tip

The “T” (Transform) in ETL is best handled on Databricks using the distributed computing power of **Spark**. Your transformation logic will be written using a Databricks-preferred language for massive data processing.

Solution: Detailed Answer

You would primarily use a PySpark DataFrame within a Databricks Notebook. After extracting the data (e.g., from a raw storage path) into a DataFrame, you apply transformations such as:

- Selecting required columns.

- Filtering out bad records (e.g., null values).

- Casting columns to the correct data types.

The final step is to load (write) the clean DataFrame to a Delta Lake table.

Conceptual PySpark Code Snippet for Transformation:

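```python
from pyspark.sql.functions import col

# `spark` (a SparkSession) is predefined in Databricks notebooks.

# 1. Extract: read the raw, headerless CSV into a Spark DataFrame.
#    Without a header, Spark names the columns _c0, _c1, _c2, ... and reads them as strings.
raw_df = (
    spark.read.format("csv")
    .option("header", "false")
    .load("abfss://raw@datalake.dfs.core.windows.net/data.csv")
)

# 2. Transform: select, cast, and rename columns, then drop rows without an id.
#    Cast before alias so each output column keeps its intended name.
#    Casting to date expects ISO-style strings such as 2024-01-31;
#    use to_date() with an explicit format for other layouts.
transformed_df = (
    raw_df.select(
        col("_c0").cast("int").alias("customer_id"),
        col("_c1").alias("full_name"),
        col("_c2").cast("date").alias("join_date"),
    )
    .filter(col("customer_id").isNotNull())  # simple data-quality filter
)

# 3. Load: write the clean DataFrame to a managed Delta table in the Lakehouse.
#    Assumes the `analytics` schema already exists.
transformed_df.write.format("delta").mode("overwrite").saveAsTable("analytics.customer_master")
```

🌟 Why This Matters: Securing Your Future in Tech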

The skills behind the Databricks platform are not merely academic; they reflect how data is managed at cloud scale. Mastering them positions you for prominent, in-demand roles: Databricks caters to Data Engineers, Data Scientists, Data Analysts, and AI Engineers [00:02:04]. This specific expertise, including the Lakehouse, Spark, and Databricks Workflows, also prepares you for recognized industry certifications and high-value project work. It is the skill set that bridges raw data and AI-driven insights.

❓ Frequently Asked Questions (FAQs)

What is the main difference between OLTP and Data Warehouse?

OLTP (Online Transaction Processing) systems are built for live, high-speed, concurrent transactions (e.g., checkout systems). A Data Warehouse (DWH) is designed for historical data storage and complex analytical queries, focusing on long-term insight rather than transaction speed [00:01:30].

Which programming languages are critical for a Databricks Data Engineer?

The most critical languages are Python (specifically PySpark) and SQL (specifically SparkSQL). Python is used for complex transformations and data science tasks, while SQL is essential for querying and managing data in the Delta Lake tables. Scala is also highly valued for its performance [00:06:06].

What is the Lakehouse Architecture mentioned in the video?

The Lakehouse is an architecture that uses a platform like Databricks and an open-source table format like Delta Lake to combine the best features of a Data Lake (low-cost storage, a variety of data types) and a Data Warehouse (structured data, governance, and ACID transactions). It’s the modern destination for processed data [00:04:05].

🎯 Summary Snapshot Table

| Focus Area | Key Takeaway | Your Action Step |
|---|---|---|
| Databricks Platform | Unified cloud environment for DWH, analytics, and AI. Handles any data volume. | Create a free Databricks Community Edition workspace to begin hands-on practice. |
| Data Engineer’s Goal | Design and optimize cloud ETL/Lakehouse pipelines for historical data. | Master the PySpark DataFrame API for robust, large-scale data manipulation. |
| Essential Skills | Strong foundation in SQL, Python, PySpark, SparkSQL, and Workflows. | Commit to mastering the foundational SQL and Python concepts first before moving to Databricks. |

✨ The path to becoming a proficient Databricks Data Engineer is structured and rewarding. It requires a clear, step-by-step approach—from mastering SQL and Python to deep-diving into Spark, PySpark, and the Lakehouse architecture. The 75 concepts detailed in the training roadmap are a testament to the comprehensive skill set required for success. Trust the process, commit to the hands-on practice, and you will unlock your career potential in the cloud data domain.

Ready to start your journey? Sign up for a live demo and take the next step in securing your high-value tech career!