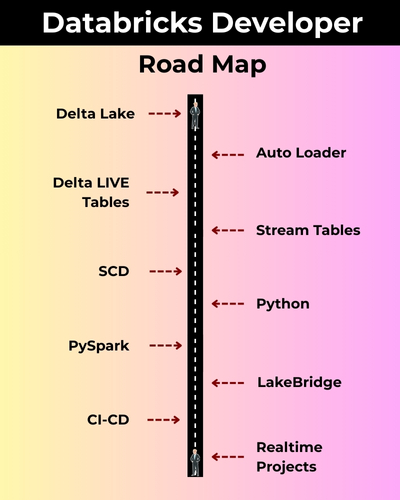

A Databricks Developer is a highly promising job role focused on building scalable data pipelines, transforming raw data into meaningful insights, and enabling advanced analytics with PySpark, Delta Lake, Lakehouse, Workflows, Auto Loader, Big Data, and more.

✅ Delta Lake, DLT & Auto Loader

✅ Spark, Spark SQL & PySpark

✅ Python, ETL & Pandas

✅ Big Data Analytics

✅ ETL/ELT

✅ Workflows, Widgets, Pipelines

✅ Cloud BI, Medallion, more..

✅ Real-Time Projects, Resume

Module 1: SQL Server TSQL (MS SQL) Queries

Ch 1: Data Analyst Job Roles

- Introduction to Data

- Data Analyst Job Roles

- Data Analyst Challenge

- Data and Databases Intro

Ch 2: Database Intro & Installations

- Database Types (OLTP, DWH, ..)

- DBMS: Basics

- SQL Server 2025 Installations

- SSMS Tool Installation

- Server Connections, Authentications

Ch 3: SQL Basics V1 (Commands)

- Creating Databases (GUI)

- Creating Tables, Columns (GUI)

- SQL Basics (DDL, DML, etc..)

- Creating Databases, Tables

- Data Inserts (GUI, SQL)

- Basic SELECT Queries

Ch 4: SQL Basics V2 (Commands, Operators)

- DDL: Create, Alter, Drop, Add, Modify, etc..

- DML: Insert, Update, Delete, Select Into, etc..

- DQL: Fetch, Insert… Select, etc..

- SQL Operations: LIKE, BETWEEN, IN, etc..

- Special Operators

Ch 5: Data Types

- Integer Data Types

- Character, MAX Data Types

- Decimal & Money Data Types

- Boolean & Binary Data Types

- Date and Time Data Types

- SQL_Variant Type, Variables

Ch 6: Excel Data Imports

- Data Imports with Excel

- SQL Native Client

- Order By: Asc, Desc

- Order By with WHERE

- TOP & OFFSET

- UNION, UNION ALL

Ch 7: Schemas & Batches

- Schemas: Creation, Usage

- Schemas & Table Grouping

- Real-world Banking Database

- 2 Part, 3 Part & 4 Part Naming

- Batch Concept & “Go” Command

Ch 8: Constraints, Keys & RDBMS – Level 1

- Null, Not Null Constraints

- Unique Key Constraint

- Primary Key Constraint

- Foreign Key & References

- Default Constraint & Usage

- DB Diagrams & ER Models

Ch 9: Normal Forms & RDBMS – Level 2

- Normal Forms: 1 NF, 2 NF

- 3 NF, BCNF and 4 NF

- Adding PK to Tables

- Adding FK to Tables

- Cascading Keys

- Self Referencing Keys

- Database Diagrams

Ch 10: Joins & Queries

- Joins: Table Comparisons

- Inner Joins & Matching Data

- Outer Joins: LEFT, RIGHT

- Full Outer Joins & Aliases

- Cross Join & Table Combination

- Joining more than 2 tables

Ch 11: Views & RLS

- Views: Realtime Usage

- Storing SELECT in Views

- DML, SELECT with Views

- RLS: Row Level Security

- WITH CHECK OPTION

- Important System Views

Ch 12: Stored Procedures

- Stored Procedures: Realtime Use

- Parameters Concept with SPs

- Procedures with SELECT

- System Stored Procedures

- Metadata Access with SPs

- SP Recompilations

- Stored Procedures, Tuning

Ch 13: User Defined Functions

- Using Functions in MSSQL

- Scalar Functions in Real-world

- Inline & Multiline Functions

- Parameterized Queries

- Date & Time Functions

- String Functions & Queries

- Aggregated Functions & Usage

Ch 14: Triggers & Automations

- Need for Triggers in Real-world

- DDL & DML Triggers

- For / After Triggers

- Instead Of Triggers

- Memory Tables with Triggers

- Disabling DMLs & Triggers

Ch 15: Transactions & ACID

- Transaction Concepts in OLTP

- Auto Commit Transaction

- Explicit Transactions

- COMMIT, ROLLBACK

- Checkpoint & Logging

- Lock Hints & Query Blocking

- READPAST, LOCKHINT

Ch 16: CTEs & Tuning

- Common Table Expression

- Creating and Using CTEs

- CTEs, In-Memory Processing

- Using CTEs for DML Operations

- Using CTEs for Tuning

- CTEs: Duplicate Row Deletion

Ch 17: Indexes Basics, Tuning

- Indexes & Tuning

- Clustered Index, Primary Key

- Non Clustered Index & Unique

- Creating Indexes Manually

- Composite Keys, Query Optimizer

- Composite Indexes & Usage

Ch 18: Group By Queries

- Group By, Distinct Keywords

- GROUP BY, HAVING

- Cube( ) and Rollup( )

- Sub Totals & Grand Totals

- Grouping( ) & Usage

- Group By with UNION

- Group By with UNION ALL

Ch 19: Joins with Group By

- Joins with Group By

- 3 Table, 4 Table Joins

- Join Queries with Aliases

- Join Queries & WHERE

- Join Queries & Group By

- Joins with Sub Queries

- Query Execution Order

Ch 20: Sub Queries

- Sub Queries Concept

- Sub Queries & Aggregations

- Joins with Sub Queries

- Sub Queries with Aliases

- Sub Queries, Joins, Where

- Correlated Queries

Ch 21: Cursors & Fetch

- Cursors: Realtime Usage

- Local & Global Cursors

- Scroll & Forward Only Cursors

- Static & Dynamic Cursors

- Fetch, Absolute Cursors

Ch 22: Window Functions, CASE

- IIF Function and Usage

- CASE Statement Usage

- Window Functions (Rank)

- Row_Number( )

- Rank( ), Dense_Rank( )

- Partition By & Order By

Ch 23: Merge (Upsert) & CASE, IIF

- Merge Statement

- Upsert Operations with Merge

- Matched and Not Matched

- IIF & CASE Statements

- Merge Statement inside SPs

- Merge with OLTP & DWH

Module 2: Databricks

Ch 1: Databricks Introduction

- Cloud ETL, DWH

- Cloud Computing

- Databricks Concepts

- Big Data in Cloud

Ch 2: Databricks Architecture

- Unity Catalog, Volume

- Spark Clusters

- Apache Spark and Databricks

- Apache Spark Ecosystem

- Compute Operations

- Hadoop, MapReduce, Apache Spark

Ch 3: Unity Catalog

- Unity Catalog Concepts

- Workspace Objects

- Databricks Notebooks

- Databricks Workspace UI

- Organizing Workspace Objects

- Creating Volumes

- Spark Table Creations

- UI: Limitations

Ch 4: Unity Catalog Operations, Spark SQL – 1

- Spark SQL Notebooks

- Creating Catalog

- Creating Schemas, Tables

- Spark Data Types

- Data Partitioning

- Managed Tables

- SQL Queries with the PySpark API

- Union, Views in Spark

- Dropping Objects

- External Tables, External Volumes

- Spark SQL Notebooks: Exports, Clone

Ch 5: SparkSQL Notebooks – 2

- Math, Sort Functions

- String, DateTime Functions

- Conditional Statements

- SQL Expressions with expr()

- Volume for our Data Assets

- File Formats, Schema Inference

- Spark SQL Aggregations

Ch 6: Python Concepts – 1

- Python Introduction

- Python Versions

- Python Implementations

- Python in Spark (PySpark)

- Python Print()

- Single, Multiline Statements

Ch 7: Python Concepts – 2

- Python Data Types

- Integer / Int Data Types

- Float, String Data Types

- Arithmetic, Assignment Ops

- Comparison Operators

- Operator Precedence

- If … Else Statement

- Short Hand If, OR, AND

- ELIF and ELSE IF Statements

Ch 8: Python Concepts – 3

- Python Lists

- List Items, Indexes

- Python Dictionaries

- Tables Versus Dictionaries

- Python Modules & Pandas

- import pandas.DataFrame

- Pandas Series, arrays

- Indexes, Indexed Lists

Ch 9: PySpark – 1

- Dataframes with SQL DB

- Pandas Dataframes

- Dataframe()

- List Values, Mixed Values

- spark.read.csv()

- spark.read.format()

- Filtering DataFrames

- Grouping your DataFrame

- Pivot your DataFrame

Ch 10: PySpark – 2

- DataFrameReader

- DataFrameWriter Methods

- CSV Data into a DataFrame

- Reading Single Files

- Reading Multiple Files

- Schema with an SQL String

- Schema Programmatically

Ch 11: PySpark – 3

- Writing DataFrames to CSV

- Working with JSON

- Working with ORC

- Working with Parquet

- Working with Delta Lake

- Rendering your DataFrame

- Creating DataFrames from Python Data Structures

Ch 12: PySpark Transformations – 1

- Data Preparation

- Selecting Columns

- Column Transformations

- Renaming Columns

- Changing Data Types

- select() and selectExpr()

- withColumn()

Ch 13: PySpark Transformations – 2

- Basic Arithmetic and Math Functions

- String Functions

- Datetime Conversions

- Date and Time Functions

- Joining DataFrames

- Unioning DataFrames

Ch 14: PySpark Transformations – 3

- Filtering DataFrame Records

- Removing Duplicate Records

- Sorting and Limiting Records

- Filtering Null Values

- Grouping and Aggregating

- Pivoting and Unpivoting

- Conditional Expressions

Ch 15: Medallion Architecture

- Medallion Architecture

- Aggregated Data Loads

- Bronze, Silver and Gold

- Temp Views

- Spark Tables (Parquet)

- Work with File, Table Sources

Ch 16: Delta Lake – 1

- Storage Layer

- Delta Table API

- Deleting Records

- Updating Records

- Merging Records

- History and Time Travel

Ch 17: Delta Lake – 2 (SCD)

- Schema Evolution

- Delta Lake Data Files

- Deleting and Updating Records

- Merge Into

- Table Utility Commands

- Exploratory Data Analysis

- Incremental Loads

- Old History Retention

- Delta Transaction Log

Ch 18: Widgets

- Text Widgets

- User Parameters

- Manual Executions

- Lake Bridge

- Databricks BridgeOne

Ch 19: Lakeflow Jobs

- Workflows & CRON

- Job Compute, Running Tasks

- Python Script Tasks

- Parameters into Notebook Tasks

- Parameters into Python Script Tasks

- Concurrent Executions, Dependencies

- Branching Control with the If-Else Task

Ch 20: Databricks Tuning

- How Spark Optimizes your Code

- Lazy Evaluation

- Explain Plan

- Inspecting Query Performance

- Caching, Data Shuffling

- Broadcast Joins

- When to Partition

- Data Skipping

- Z Ordering

- Liquid Clustering

- Spark Configurations

Ch 21: Version Control & GitHub

- Local Development

- Runtime Compatibility

- Git and GitHub Pre-requisites

- Git and GitHub Basics

- Linking GitHub and Databricks

- Databricks Git Folders

- Project Code to GitHub

- Adding Modules to the Project Code

- Databricks Job Updates, Runs

Ch 22: Spark Structured Streaming

- Streaming Simulator Notebook

- Micro-batch Size

- Schema Inference and Evolution

- Time Based Aggregations and Watermarking

- Writing Streams

- Trigger Intervals

- Delta Table Streaming Reads and Writes

Ch 23: Auto Loader

- Reading Streams with Auto Loader

- Reading a Data Stream

- Manually Cancel your Data Streams

- Writing to a Data Stream

- Workspace Modules

Ch 24: Lakeflow Declarative Pipelines

- Delta Live Tables

- Data Generator Notebook

- Pipeline Clusters

- Databricks CLI

- Data Quality Checks

- Streaming Dataset “Simulator”

- Streaming Live Tables

Ch 25: Security: ACLs

- Overview of ACLs

- Adding a New User to our Workspace

- Workspace Access Control

- Cluster Access Control

- Groups

Ch 26: Realtime Project @ Ecommerce / Banking / Sales

- Detailed Project Requirements

- Project Solutions

- Project FAQs

- Project Flow

- Interview Questions & Answers

- Resume Guidance (1:1)

#DataLake #DeltaLake #AutoLoader #DLTTables #Medallion #Workflows #Widgets #DBFS #Spark #SparkSQL #PySpark #SparkCluster #CloudComputing #DatabricksCertifications #DatabricksAssociateEngineer #DatabricksDeveloper

What is the Databricks Developer course and who is it for?

The Databricks Developer course is a complete, job-oriented training program designed for aspiring Data Engineers, Big Data professionals, Python/SQL developers, and anyone planning a career in Databricks or Lakehouse-based ETL systems.

What are the prerequisites to enroll in this course?

No prior programming experience is required. Basic SQL knowledge helps, but the course teaches SQL and Python from the fundamentals before moving into advanced Databricks concepts.

What key skills will I learn in this Databricks Developer training?

You will learn SQL Server (T-SQL), Python for ETL, Databricks Workspace, Spark SQL, PySpark, Delta Lake, Delta Live Tables, Auto Loader, Unity Catalog, Workflows, Streaming, Lakehouse Architecture, and complete real-time project pipelines.

Does the course include real-time projects and hands-on labs?

Yes. The course is 100% hands-on and includes real-time projects in domains such as e-commerce, banking, and sales, with end-to-end ETL pipelines, CI/CD, Delta Lake, and Medallion architecture.

What roles can I apply for after completing the Databricks Developer course?

You can apply for roles like Databricks Developer, Data Engineer, PySpark Developer, ETL Engineer, Azure/AWS Data Engineer, and Lakehouse Engineer.

What are the main modules covered in the course?

The course consists of two modules:

Module 1 – SQL Server & T-SQL

Module 2 – Databricks (Python for ETL, Spark, Delta, Streaming, DLT, UC, Pipelines)

What Databricks technologies will I learn (Delta, DLT, Auto Loader, PySpark)?

You will learn Delta Lake, Delta Live Tables (DLT), Auto Loader, Spark SQL, PySpark, Unity Catalog, Streaming, Workflows, Lakeflow, and Performance Tuning.

How deeply are SQL and Python taught in this program?

SQL is taught from basics to advanced (Joins, Views, SPs, Triggers, CTEs, Window Functions, Merge, Indexing). Python covers data types, functions, loops, exceptions, file handling, Pandas, and ETL transformations.

Does the course include Databricks Workspace, Unity Catalog & Admin concepts?

Yes. You will learn Workspace objects, Unity Catalog Admin, metastore, system tables, securable objects, catalog-schema-volume creation, and access control lists.

Is Databricks Streaming, Auto Loader & Delta Streaming included?

Yes. Structured Streaming, Auto Loader, micro-batch, watermarking, Delta streaming reads/writes, and live streaming pipelines are included.

Is the training 100% hands-on with daily tasks?

Yes. The training includes daily assignments, weekly mock interviews, real-time projects, and end-to-end pipeline tasks.

Will I get access to Databricks Workspace, datasets & notebooks for practice?

Yes. Students receive full access to Databricks Workspace, practice notebooks, datasets, and all required implementation files.

Do I get resume preparation, interview guidance, and mock interviews?

Yes. You get 1:1 resume building, weekly mock interviews, project-based interview questions, and career support until placement.

Do you provide certification guidance for Databricks Data Engineer exams?

Yes. You will receive preparation support for Databricks Certified Data Engineer Associate and Professional certifications.

What is the demand for Databricks Developers in the job market?

Databricks is one of the fastest-growing platforms in the Data Engineering ecosystem, and companies worldwide are rapidly adopting the Lakehouse architecture, creating high demand for skilled developers.

What is the average salary of a Databricks Developer (India / Abroad)?

In India, salaries range from ₹8 LPA to ₹22 LPA. In the USA, salaries range from $95,000 to $170,000 depending on experience and cloud expertise.

Is Spark mandatory to learn Databricks?

Yes, Spark is the core engine behind Databricks. However, the course teaches Spark SQL and PySpark from scratch, so beginners can learn smoothly.

How is Databricks different from Azure Data Factory or Synapse?

Azure Data Factory is an orchestration tool, Synapse is a warehouse/analytics platform, while Databricks is a unified Lakehouse Platform for ETL, ML, BI, and high-performance data pipelines.

Is this course suitable for freshers and non-IT learners?

Yes. The program starts from zero level and gradually moves to advanced ETL and Lakehouse topics, making it suitable for freshers, working professionals, and career switchers.

How do I enroll or attend the Free Demo session?

You can join by contacting the team at +91 96664 40801 or visiting www.sqlschool.com to register for a free live demo session.

What training modes are available for the Databricks Developer course?

The course is available in multiple flexible modes including Live Online Instructor-Led Training, Classroom Training at our institute, and Self-Paced Video Learning. You can choose the mode that best fits your schedule and learning style.

What makes this Databricks training different from other institutes?

This program is taught by real-time industry experts with 20+ years of experience and includes 100% hands-on labs, daily tasks, weekly assessments, real-time case studies, resume building, mock interviews, and complete placement assistance, ensuring practical learning and job readiness.

SQL SCHOOL

24x7 LIVE Online Server (Lab) with Real-time Databases.

Course includes ONE Real-time Project.

Why Choose SQL School

- 100% Real-Time and Practical

- ISO 9001:2008 Certified

- Weekly Mock Interviews

- 24/7 LIVE Server Access

- Realtime Project FAQs

- Course Completion Certificate

- Placement Assistance

- Job Support