As a candidate for this exam, you should have subject matter expertise in designing, creating, and deploying enterprise-scale data analytics solutions.

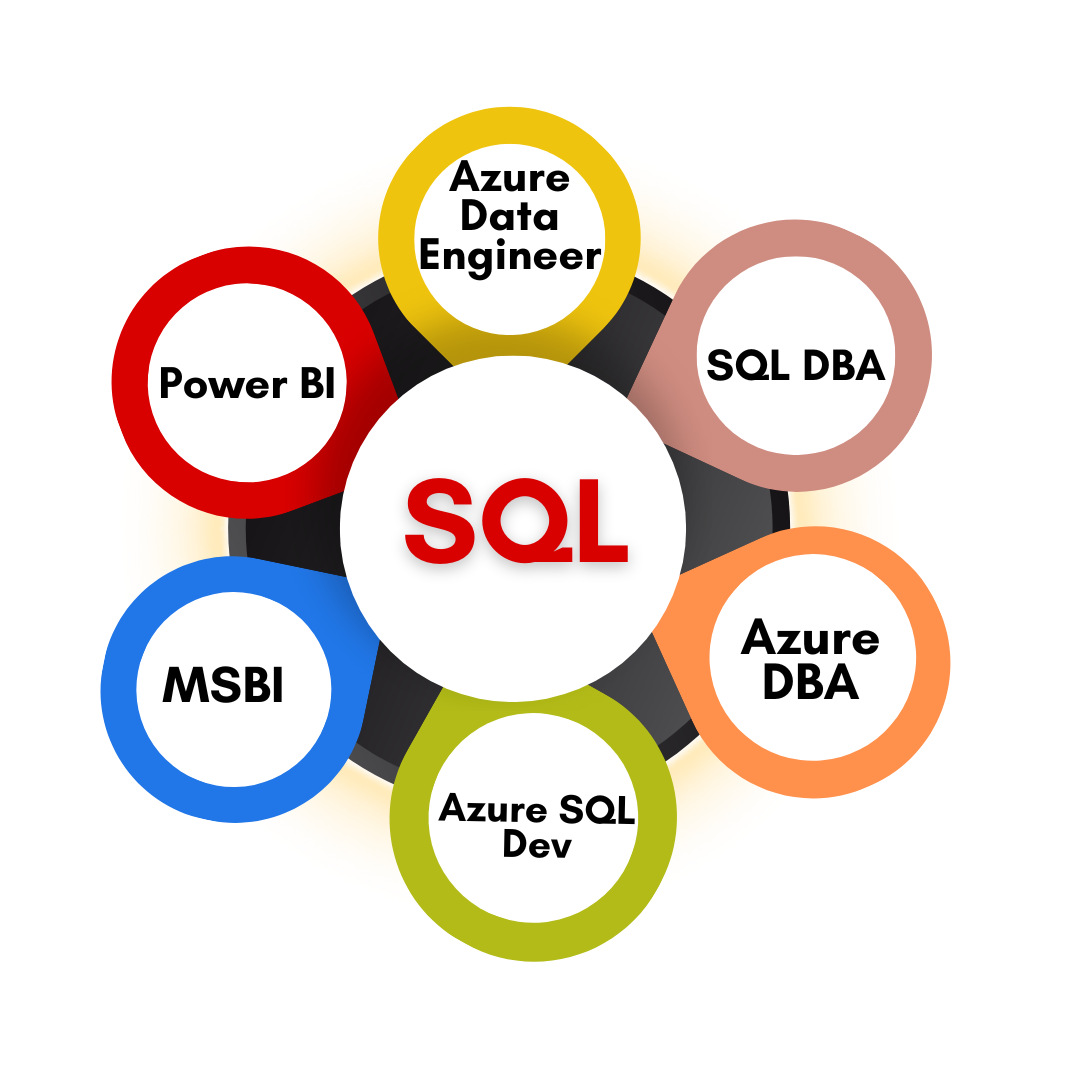

The Microsoft Fabric Analytics Engineer exam focuses on designing and deploying enterprise-scale data analytics solutions. Key skills include data transformation using Fabric components like Lakehouses, Dataflows, Data Pipelines, and Semantic Models. Responsibilities involve implementing best practices, data modeling, Git-based version control, and SQL. Collaboration with roles like Solution Architects and Data Engineers is essential. Core tasks include optimizing performance, building star schemas, and integrating predictive analytics. Expertise in tools like Power BI, DAX, and PySpark is required. The exam measures proficiency in planning analytics environments, serving data, managing semantic models, and conducting exploratory analytics.

SQL SCHOOL

24x7 LIVE Online Server (Lab) with Real-time Databases.

Course includes ONE Real-time Project.

Technical FAQs

Who is SQL School? How long have you been providing training services?

SQL School is a registered training institute, established in February 2008 in Hyderabad, India. We offer real-time training and projects, including job support, exclusively on Microsoft SQL Server, T-SQL, SQL Server DBA and MSBI (SSIS, SSAS, SSRS) courses. All our training services are completely practical and real-time.

CREDITS of SQL School Training Center

- We are a Microsoft Partner (ID# 4338151)

- ISO Certified Training Center

- Completely dedicated to Microsoft SQL Server

- All trainings delivered by our Certified Trainers only

- One of the few institutes that has consistently delivered training for 19+ years, online as well as in-house

- Real-time projects in:

  - Healthcare

  - Banking

  - Insurance

  - Retail Sales

  - Telecom

  - ECommerce

I registered for the Demo but did not get any response?

Make sure you provide all the required information. Upon approval, you should receive an email explaining how to join the demo session. The approval process usually takes from a few minutes to a few hours. Please also check your spam folder.

Why do you need our contact number and full name for demo/training registration?

This ensures that we are dealing with authenticated, trusted attendees, because we need to share our bank details and other payment information once you are happy with our training procedure and demo session. Your contact information is kept completely confidential as per our Privacy Policy. Payment receipts and course completion certificates are issued with the same details.

What is the Training Registration & Confirmation Process?

After you submit the demo registration form and attend the LIVE demo session, we need to receive your email confirmation that you are joining the training. Only then are payment details sent and a slot allocated, subject to availability of seats. We have the required tools for ensuring interactivity and quality of our services.

Please note: slot confirmation is subject to availability of seats.

Will you provide the Software required for the Training and Practice?

Yes, during the free demo session itself. We use Enterprise Edition evaluation editions (full version with complete feature support, valid for six months) for our trainings. Software and installation guidance is provided for T-SQL, SQL DBA and MSBI / DW courses.

How am I assured of the quality of the services?

We have been providing training (online, video and classroom) for the last 19+ years, effectively and efficiently, to more than 100,000 (1 lakh) students and professionals across the USA, India, the UK, Australia and other countries. We are dedicated to offering real-time, practical, project-oriented training exclusively on SQL Server and related technologies. We provide a 24×7 lab and assistance with job support, even after the course. To help you gain confidence in our training, participants are requested to attend a free LIVE demo based on the schedules posted at Register. Alternatively, participants may request a video demo by mailing contact@sqlschool.com. The registration process takes place once you are happy with the demo session. Further, payments are accepted in installments (via PayPal / online banking) to ensure trusted services from SQL School™.

Why Choose SQL School

- 100% Real-Time and Practical

- ISO 9001:2008 Certified

- Concept-wise FAQs

- TWO Real-time Case Studies, One Project

- Weekly Mock Interviews

- 24/7 LIVE Server Access

- Realtime Project FAQs

- Course Completion Certificate

- Placement Assistance

- Job Support

- Realtime Project Solution

- MS Certification Guidance